Welcome to the future of digital storytelling!

AI-powered immersive storytelling and content personalization are revolutionizing how we experience media.

These technologies allow creators to tailor content to individual preferences, making stories more engaging, relevant, and emotionally resonant. Whether you’re watching a show that adapts to your mood or reading an article that aligns with your interests, AI is reshaping the way we connect with content.

As a communications professional, I’m passionate about how these tools can be used ethically to enhance—not manipulate—audience engagement.

The Evolution of AI in Storytelling

The journey of AI in storytelling began with simple automation tools and has evolved into sophisticated systems capable of generating entire narratives. Early applications focused on data-driven personalization—like Netflix recommending shows based on viewing history. Today, platforms like ChatGPT and AI Dungeon allow users to co-create stories in real time.

According to Manovich (2020), “AI enables the creation of narratives that adapt in real-time to user behavior, transforming passive audiences into active participants.” Zhang and Wang (2023) further explain that generative AI models are now being used to craft emotionally intelligent content that responds to user feedback and preferences.

This evolution reflects a broader shift in media—from one-size-fits-all broadcasting to deeply personalized, interactive experiences. As these technologies continue to grow, so does the need for ethical standards that protect user privacy and prevent manipulation.

Latest Insights in AI-Powered Storytelling

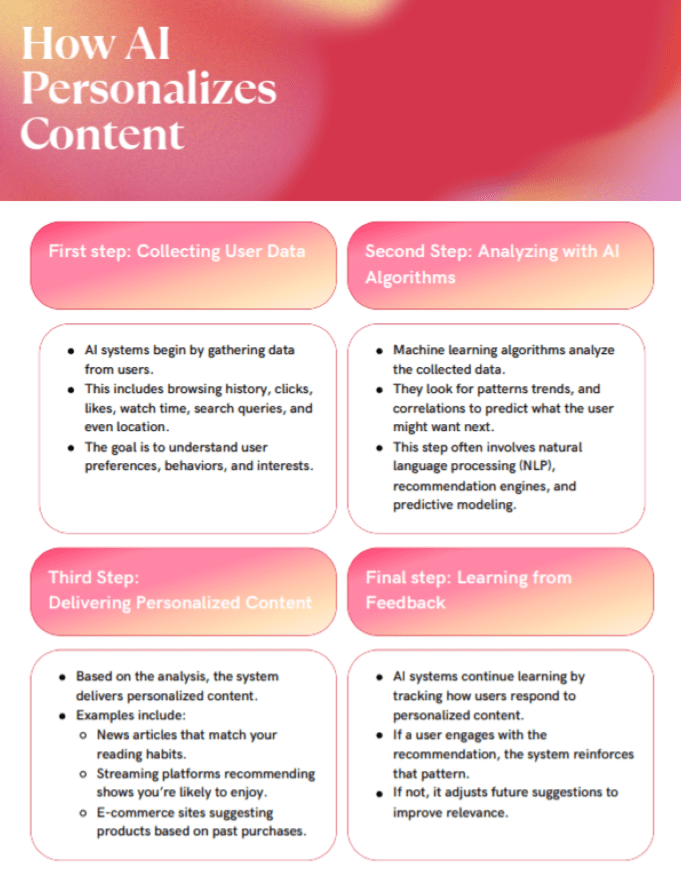

This visual illustrates the four key steps in how AI personalizes content—starting with user data collection and ending with a feedback loop that refines future recommendations.
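To make that loop concrete, here is a minimal Python sketch of a four-step personalization cycle. Only the first step (collecting user data) and the last (a feedback loop that refines future recommendations) come from the visual described above; the two middle steps, building a tag-count profile and ranking unseen titles against it, are assumptions made for illustration. The catalog, titles, tags, and function names are all hypothetical, and real platforms are far more sophisticated than this.

```python
from collections import Counter

# Hypothetical catalog: title -> topic tags (illustrative data only).
CATALOG = {
    "Deep Sea Mysteries": {"nature", "documentary"},
    "City Bakers": {"food", "reality"},
    "Forest Giants": {"nature", "travel"},
    "Street Food Tour": {"food", "travel"},
}


def collect(history):
    """Step 1 (from the visual): gather user data, here a list of viewed titles."""
    return [title for title in history if title in CATALOG]


def build_profile(viewed):
    """Assumed middle step: count how often each tag appears in what was viewed."""
    profile = Counter()
    for title in viewed:
        profile.update(CATALOG[title])
    return profile


def recommend(profile, viewed, k=2):
    """Assumed middle step: rank unseen titles by how well their tags match the profile."""
    unseen = [t for t in CATALOG if t not in viewed]
    # Sort by score (highest first), then by title so ties resolve predictably.
    ranked = sorted(unseen, key=lambda t: (-sum(profile[tag] for tag in CATALOG[t]), t))
    return ranked[:k]


def feedback(profile, title, liked):
    """Final step (from the visual): feedback adjusts the profile for next time."""
    delta = 1 if liked else -1
    for tag in CATALOG[title]:
        profile[tag] += delta
    return profile


viewed = collect(["Deep Sea Mysteries"])
profile = build_profile(viewed)
first_pick = recommend(profile, viewed, k=1)[0]
profile = feedback(profile, first_pick, liked=False)
second_pick = recommend(profile, viewed + [first_pick], k=1)[0]
print(first_pick, "->", second_pick)
```

In this toy run, a thumbs-down on the first recommendation lowers the weight of its tags, so the next recommendation shifts to a different kind of title. The same mechanism is what makes over-personalization a concern: every signal narrows what the user sees next.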

Case Studies

- Spotify’s interactive design and personalized playlists show how digital media platforms can enhance user engagement. As highlighted in Evans (2024), Spotify’s homepage adapts to user behavior, offering curated content that feels uniquely tailored. This approach demonstrates how personalization, when done ethically, can deepen user connection and improve content relevance.

- Brands across many industries are exploring how AI can elevate their image and better serve their customers’ needs, and data-driven insights are reshaping product research and development. A second example is the collaboration between L’Oréal and IBM, which is discussed in detail in the Smart Talks with IBM podcast (Gladwell, 2025).

- Marketers can now turn to AI tools built specifically to help create campaigns and messages tailored to their brand’s needs and stories. The final example is Storybrand.ai, which is demonstrated in the tutorial video below (YouTube, 2023).

AI in Storytelling: Opportunities and Challenges

Artificial intelligence is transforming the way stories are created and consumed. On the positive side, AI enables personalized experiences, making content more engaging and relevant to individual users (Zhang & Wang, 2023). It also allows creators to scale their work efficiently, automate repetitive tasks, and experiment with new formats like interactive narratives and voice synthesis (Manovich, 2020).

However, these innovations come with challenges. AI-generated content can lack emotional nuance, and over-personalization may lead to echo chambers or manipulation (Zhang & Wang, 2023). Ethical concerns also arise around transparency, authorship, and data privacy (Journalism University, 2023). As communicators, it’s essential to balance innovation with responsibility, ensuring that storytelling remains authentic and inclusive.

How to Recognize Manipulative AI Content

As AI becomes more embedded in digital media, audiences must be equipped to recognize when content may be designed to manipulate rather than inform. According to Journalism University (2023), there are several red flags that can help identify manipulative AI-generated content:

- Over-personalized messaging that feels too tailored or emotionally charged (Zhang & Wang, 2023).

- Lack of transparency about whether content is AI-generated.

- Absence of credible sources or citations.

- Emotionally manipulative language without factual support.

By staying informed and critically evaluating digital content, audiences can better protect themselves from misleading narratives and engage with media more responsibly (Journalism University, 2023).

Molly Cavanaugh | Future Communications Professional | Passionate about ethical storytelling and digital media innovation.

Course | Knowledge & New Media 2025 – COM-510-11188-M01 | Professor Heather Kimball | October 11th, 2025

To support the ideas and insights shared above, the following sources were used and cited ethically to ensure accuracy and credibility.

Evans, D. (2024, September 25). 20 of the Best Interactive Websites. HubSpot. https://blog.hubspot.com/website/interactive-websites

Gladwell, M. (Host). (2025, July 15). L’Oréal and IBM: AI-powered beauty [Audio podcast episode]. In Smart Talks with IBM. iHeartRadio. https://www.iheart.com/podcast/1307-smart-talks-with-ibm-79842497/episode/loreal-and-ibm-ai-powered-beauty-285944471/

Journalism University. (2023). Ethical dilemmas in news media today. https://journalism.university/media-ethics-and-laws/ethical-dilemmas-in-news-media-today/

Manovich, L. (2020). AI and the future of storytelling. https://manovich.net

YouTube. (2023, July 15). Learn How To Use Storybrand.ai [Video]. YouTube. https://www.youtube.com/watch?v=du-lT5mQVws

Zhang, Y., & Wang, J. (2023). Generative AI-driven storytelling: A new era for marketing. arXiv. https://arxiv.org/pdf/2309.09048